Learn why effective AI red teaming must go beyond model attacks to focus on securing the entire application against real-world threats.

Fergal Glynn

Prompt injection attacks are among the most common and damaging attacks against large language models (LLMs). The OWASP GenAI Security Project ranks prompt injection as the most serious threat to generative AI, and these threats are only increasing.

Prompt injections are successful because they exploit the very foundation of how LLMs answer user questions. Because of that, it can be very tricky to detect these attacks, much less prevent and mitigate them.

Fortunately, a proactive, layered approach helps organizations better detect these threats. In this guide, you’ll learn which prompt injection detection strategies work best against today’s 24/7 threats.

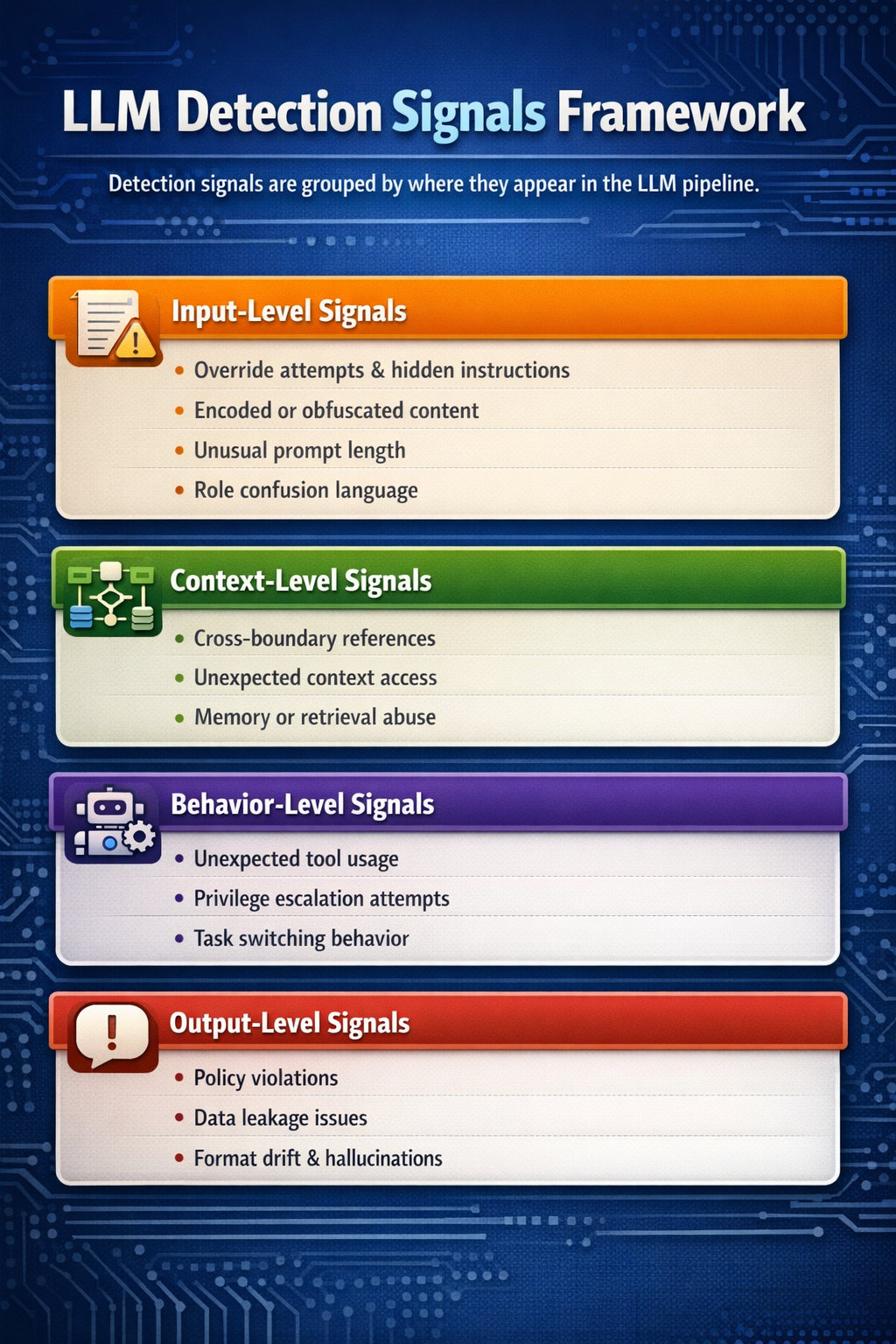

Prompt injection rarely shows up as one obvious red flag. It shows up as signals across the prompt, context, behavior, and output. A useful detection framework groups those signals by where they appear in the LLM pipeline.

Below is a practical way to think about prompt injection detection signals.

These signals appear directly in the user prompt or external content:

These signals emerge from how the prompt interacts with system context and memory:

These signals show up in how the model acts:

These signals appear in the model’s responses:

The best detection methods help identify abnormal behavior, policy violations, and suspicious inputs long before they become real-world risks. Layer these methods to create a comprehensive defense against prompt injection attacks.

Least privilege rules are a best practice for any digital platform, including LLMs. Always tightly scope what your model, tools, or plugins can access.

This approach simplifies detection by making abnormal or out-of-policy requests immediately stand out. For example, if an LLM suddenly attempts to call a restricted API, you can identify that as an attack with a high degree of confidence.

Prompt injections happen when your system trusts inputs that it shouldn’t. That’s why input validation is so critical. Enforce strict schemas and only accept allowed formats so the LLM can flag any user attempts to override system instructions.

Sanitization also helps. This process strips control tokens and isolates user text from trusted system prompts, reducing the likelihood that a user can change the LLM’s behavior.

It’s just as important to monitor an LLM’s outputs as its inputs. Sometimes prompt injection attacks reveal themselves at the output stage, where the model generates unsafe content or other odd deviations from scope.

Create output validation layers to compare the LLM’s responses against your safety policies and allowlists. Most monitoring solutions can do this in real time, helping you mitigate prompt injections before they cause further damage.

Malicious attackers use creative prompt injection techniques to circumvent your LLM guardrails. The best way to prevent both known and novel prompt injection techniques is to think like these attackers. With AI red teaming, organizations can intentionally stress-test LLMs with adversarial inputs before attackers can do real damage.

Red teams use a variety of techniques, from role-playing to indirect prompt injections, to identify points of failure. Solutions like Mindgard’s Offensive Security conduct red teaming 24/7, helping models create a tighter feedback loop that strengthens security.

While monitoring systems and red teaming automations have their place in your security layers, humans still need to be involved. Human-in-the-loop processes route high-risk inputs or uncertain decisions to trained reviewers before taking action.

This addition helps businesses strike a balance between time-saving security automations and the use of valuable human judgment to catch subtle attacks.

Most teams think they have detection covered. In practice, blind spots emerge fast. Prompt injection exploits those weaknesses.

Here’s where detection usually breaks:

These failures create false confidence. Teams believe they’re protected, but in reality, they’re highly vulnerable.

Mindgard’s AI Security Risk Discovery & Assessment complements Offensive Security by continuously mapping AI risk, validating defenses, and exposing vulnerabilities across prompts, context, tools, and outputs.

The attack surface expands when LLMs move beyond the chat interface. Modern systems connect models to external data, tools, and workflows, so detection must extend beyond prompts and outputs to every layer where instructions can influence behavior.

Prompt injection in these environments rarely appears as a single signal. Instead, it emerges across retrieved content, agent decisions, tool execution, and persistent memory.

Retrieval-augmented generation (RAG) systems introduce a new injection vector: external documents. Malicious instructions can be embedded in retrieved content and disguised as legitimate data.

Detection must inspect retrieved sources alongside user prompts. Signals should flag instruction-like patterns, unexpected directives, or anomalous content within external data.

Agents plan, act, and chain decisions across multiple steps. Prompt injection can subtly redirect goals, alter task logic, or reshape decision paths without triggering obvious errors.

Detection must monitor planning behavior and decision trajectories. Unexpected shifts in goals or tool usage often provide the earliest warning signs.

Tools, plugins, and APIs extend LLM capabilities into real-world systems. As a result, the attack surface expands from text prompts to tool invocation and parameter execution.

Prompt injection frequently targets these interfaces. Adversarial prompts can trigger high-privilege tool calls, manipulate parameters, or redirect agent workflows in ways that bypass traditional safeguards.

Agentic systems introduce even broader attack surfaces beyond traditional tools and APIs. Mindgard identified multiple real-world cases where adversarial inputs manipulated agent behavior, development environments, and external interfaces, including:

Together, these cases show why input monitoring alone is insufficient. Effective detection must analyze tool invocation patterns and agent behavior in real time, not just the content of user prompts.

Memory changes how prompt injection persists. Malicious instructions can survive across sessions, and subtle manipulation can accumulate gradually over time.

Detection must track memory access, updates, and recall patterns. Anomalies in how information is stored or retrieved often signal long-term injection attempts.

Prompt injection attacks are becoming more prevalent as organizations expand their use of LLMs. Multi-layered detection strategies are no longer optional. They define whether teams can identify real risk before it escalates.

Detection alone is not enough. Teams also need continuous visibility into where models, tools, and workflows are exposed. Mindgard’s AI Security Risk Discovery & Assessment surfaces hidden vulnerabilities across the LLM attack surface, while Mindgard’s Offensive Security stress-tests models under real adversarial conditions.

Together, these capabilities combine automated discovery, red teaming, and risk-driven monitoring across the LLM lifecycle. Identify prompt injection risks before they reach production. Book a Mindgard demo to discover how to continuously pressure-test your AI environment.

Instead of exploiting vulnerabilities, prompt injections target how an LLM interprets and prioritizes instructions. This approach means LLM teams can’t rely solely on code analysis. Prompt injections require visibility into inputs, outputs, and tool usage to detect malicious behavior.

Common signals include attempts to override system instructions, unexpected tool calls, abnormal output formatting, or content that violates policy. A single red flag may be an expected anomaly, but detecting these signals across multiple detection layers indicates that an attack is underway.

Red teaming reveals how real-world attacks bypass controls. These tests help teams to identify weak detection signals and tune monitoring rules. Continuous red teaming ensures your detection strategies evolve alongside new attack techniques.