A technical exploration of modern AI red teaming, examining how probabilistic behavior, classic vulnerabilities, and psychometric steering combine to create real-world AI security risk.

Powered by the world’s most effective attacker-aligned attack library, Mindgard enables security teams to uncover how real adversaries discover, exploit, and escalate attacks against AI systems.

Mindgard enables organizations to continuously pressure-test AI systems the same way real attackers do, across development, deployment, and runtime.

Find and remediate AI vulnerabilities only detectable at runtime. Integrate into existing workflows with CI/CD automation and a Burp Suite extension.

Secure the AI systems you build, buy and use.

Test AI applications, agents and LLMs, including image, audio and multi-modal models.

Empower your team to identify AI risks that static code analysis or manual testing cannot detect. Reduce testing times from months to minutes.

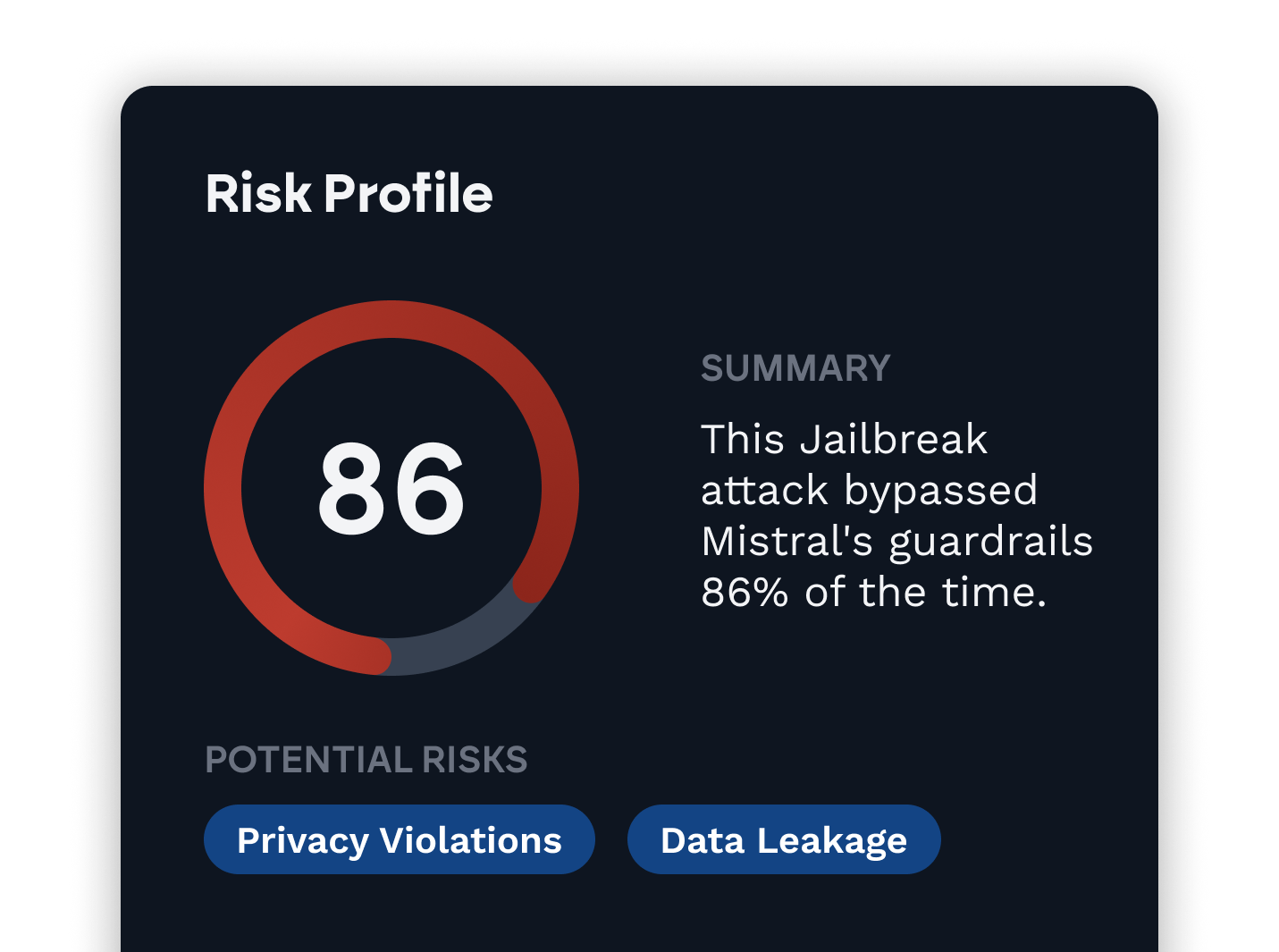

Mindgard applies the same tactics and techniques real attackers use to probe and exploit AI systems across models, agents, and workflows. Attacker-aligned testing at scale surfaces high-impact risks with clear evidence and actionable remediation.

Mindgard was spun out of leading university AI security research, with over a decade of work studying how AI systems fail under adversarial pressure. This research directly informs the attacks, testing strategies, and defenses built into the Mindgard platform.

Point the Mindgard platform to your existing AI products and environments

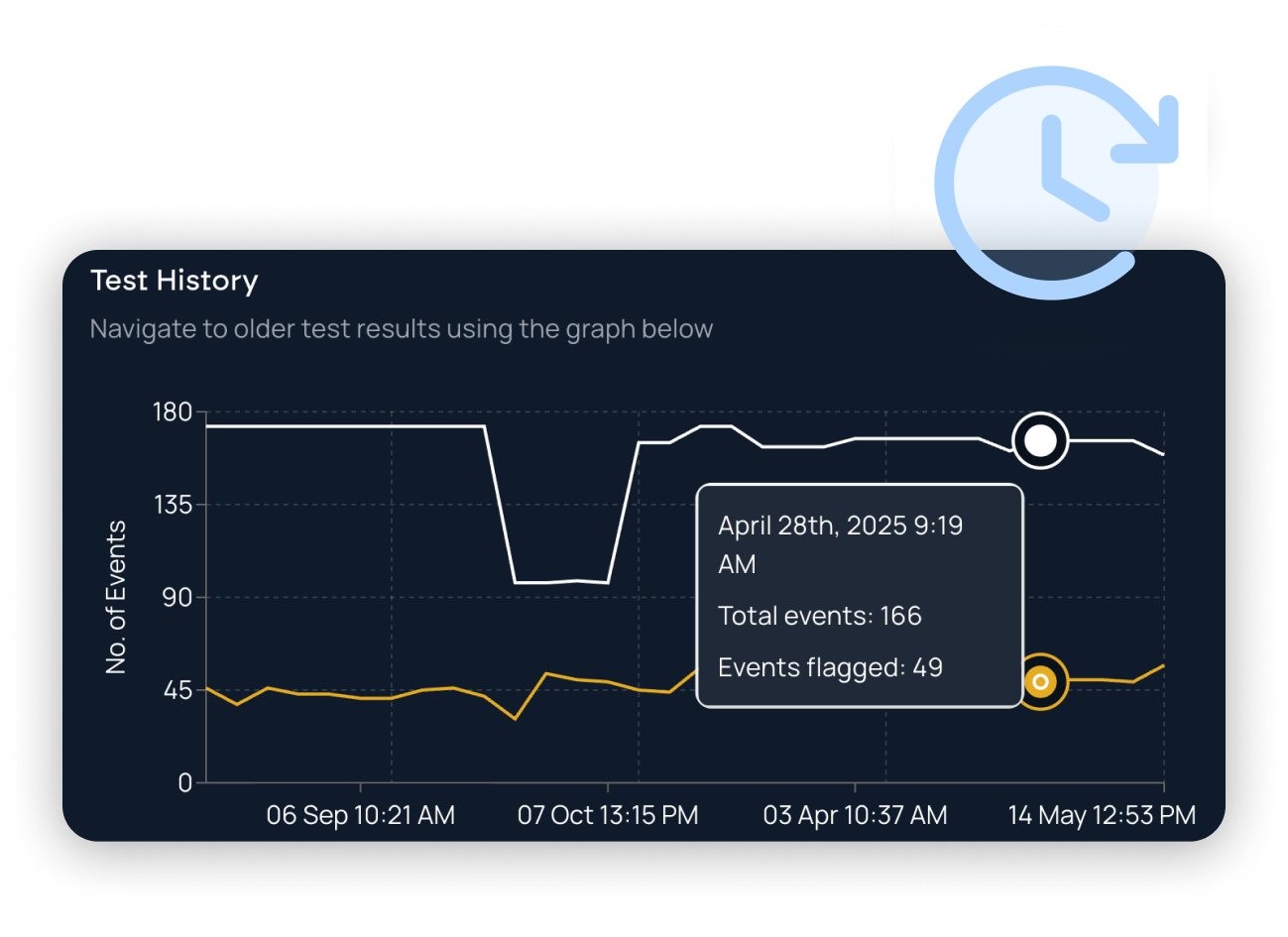

Effortlessly run custom or scheduled tests on your AI with just one click

Get a detailed view of scenarios and threats to your AI, and easily analyse risks

Integrate report viewing smoothly into your existing systems and SIEM.

Empower your engineering team to review reports and take action with ease

Safeguard all your AI assets by continuously testing and remediating security risks, ensuring the security of both third-party AI applications and in-house systems.

Gain visibility and respond quickly to risks introduced by developers building AI.

Evaluate and strengthen AI guardrails and WAF solutions against vulnerabilities.

Identify and address risks in tailored AI models versus baseline models.

Empower pen-testers to efficiently scale AI-focused security testing efforts.

Enable developers to integrate seamless, ongoing testing for secure AI deployments.

Whether you're just getting started with AI security testing or looking to deepen your expertise, our content is here to support you every step of the way.

Take the first step towards securing your AI. Book a demo now and we'll reach out to you.