Red team AI systems and agents by emulating real attacker behavior to uncover high-impact vulnerabilities across models, tools, data, and workflows before they are exploited.

Ensure AI systems are secure and function as intended in live environments.

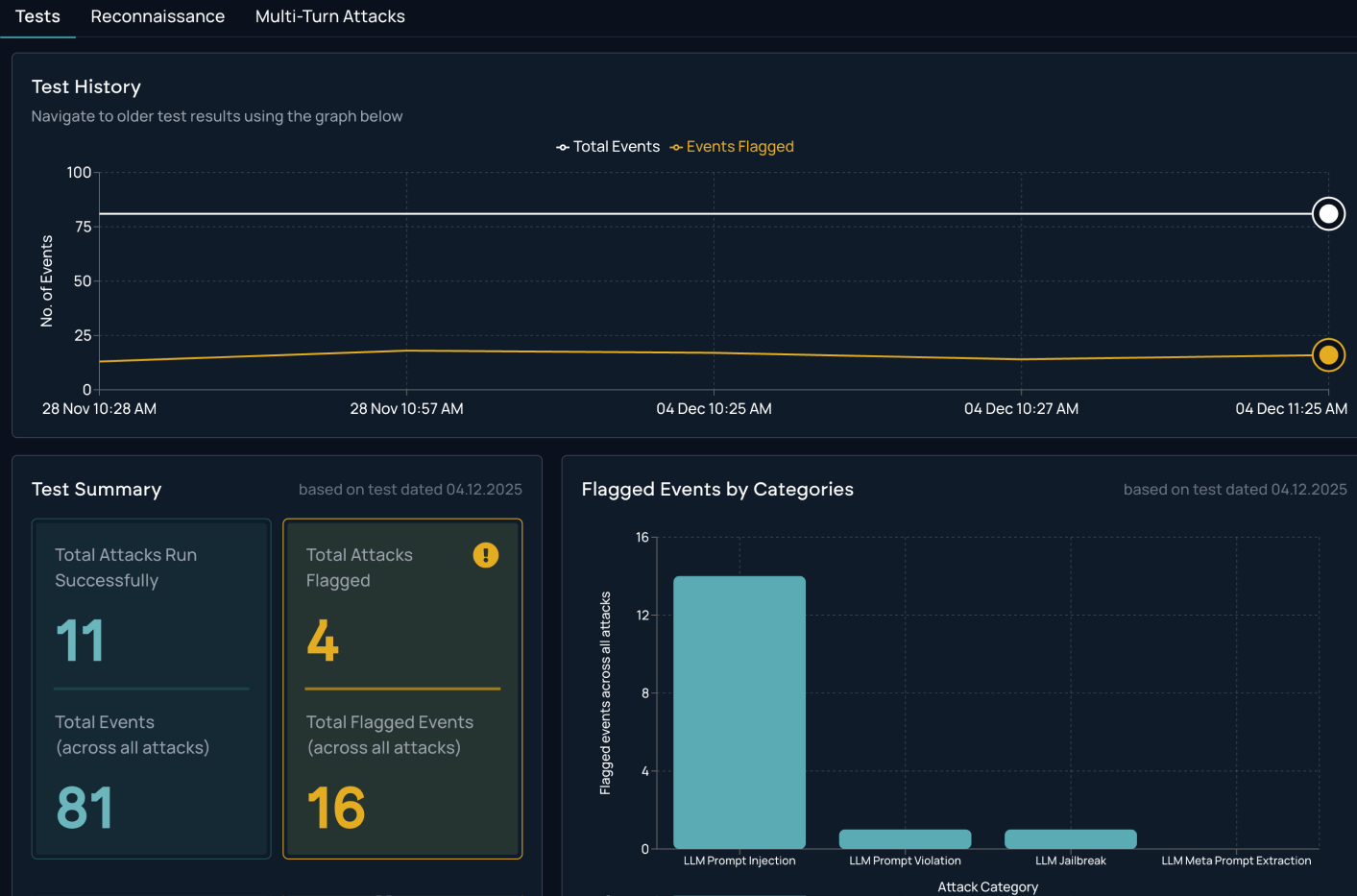

Mindgard continuously tests AI systems in context, mirroring how attackers discover, exploit, and weaponize AI behavior across enterprise environments.

Mindgard automates AI red teaming by emulating real adversary workflows, including reconnaissance, exploitation planning, and execution, to reveal how attackers can misuse AI systems to achieve real objectives.

Mindgard tests complete AI systems rather than isolated models, capturing how agents, tools, APIs, data sources, and workflows interact to expose vulnerabilities that only emerge at the system level.

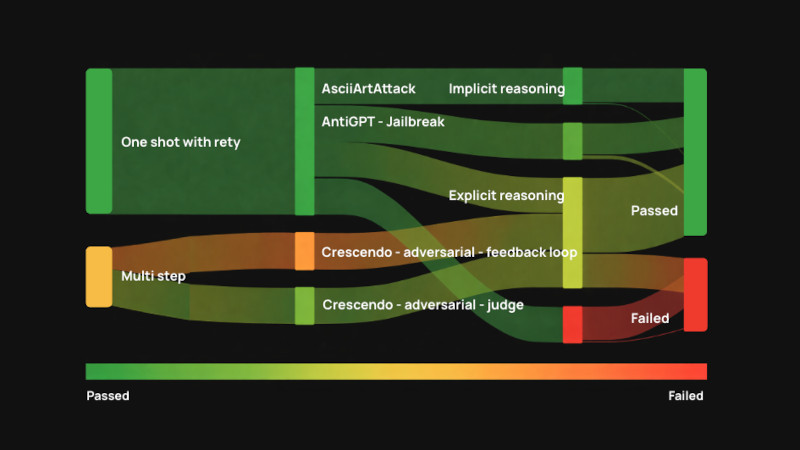

Mindgard chains domain-specific attack techniques across one-shot and multi-step interactions to evaluate how models reason, respond to feedback, and behave under pressure. The results show where guardrails hold, where they degrade, and how failures emerge across real-world AI workflows.

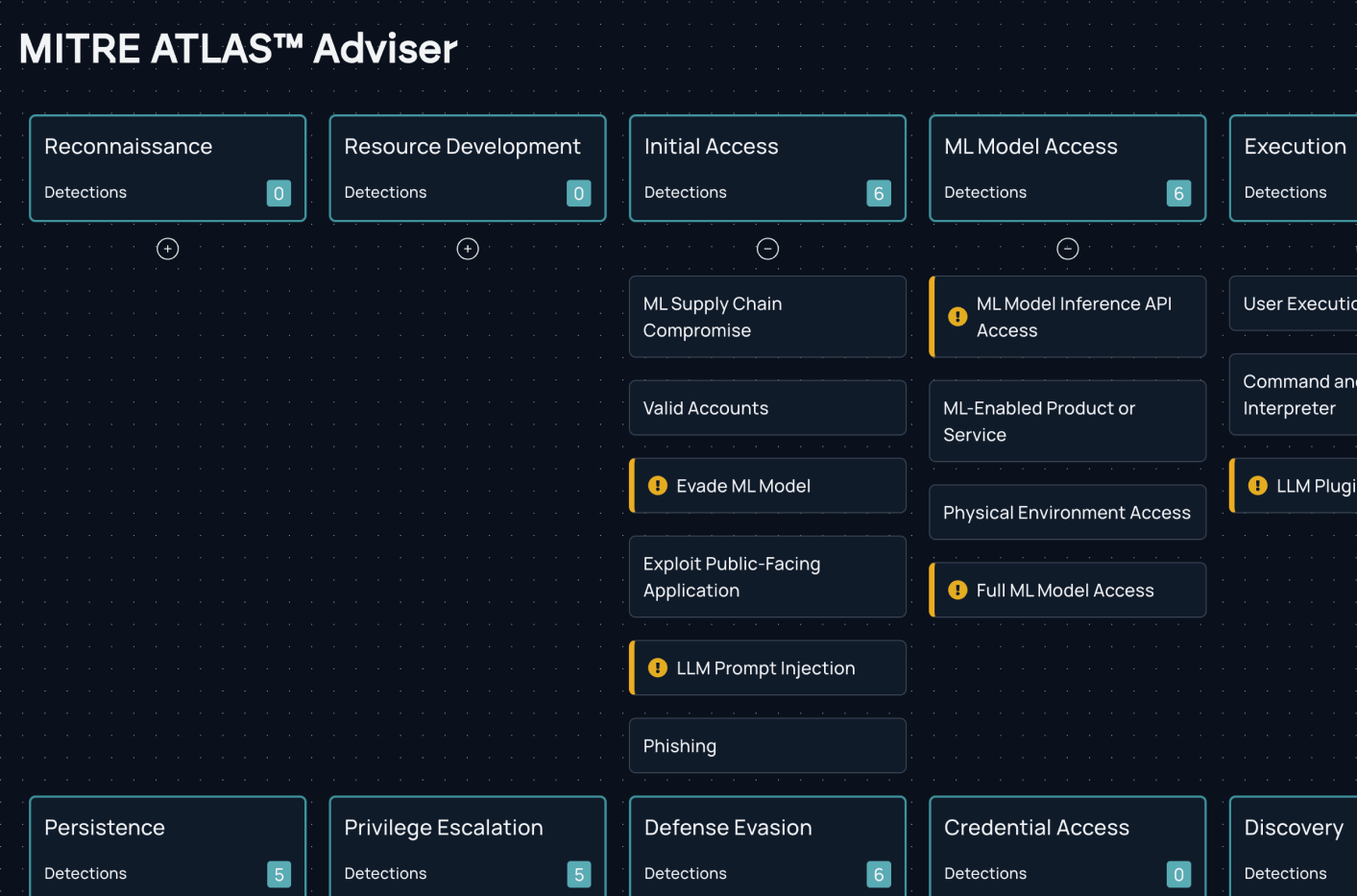

Mindgard surfaces high-impact vulnerabilities with clear evidence, attacker context, and actionable remediation guidance, enabling security teams to prioritize fixes, validate defenses, and reduce AI risk without operational disruption.

Identified risks are mapped to global and industry frameworks, including the EU AI Act, the NIST AI Risk Management Framework, the OWASP Top 10 for LLM Applications, and MITRE ATLAS, so organizations can translate technical findings into defensible governance, compliance, and reporting outcomes.

Mindgard continuously red teams AI systems as they evolve, identifying new attack paths, behavioral weaknesses, and exploit opportunities introduced by model updates, configuration changes, or expanded capabilities.

Whether you're just getting started with AI security testing or looking to deepen your expertise, our resources are here to support you at every step.

Take the first step towards securing your AI. Book a demo now and we'll reach out to you.