A global semiconductor manufacturer needed to understand how real adversaries could iteratively probe, manipulate, and exploit AI systems

Fergal Glynn

The customer is a global semiconductor manufacturer operating at massive scale, supporting advanced research, design, and manufacturing operations worldwide. As part of its digital transformation, the organization deployed multiple AI-powered applications to support engineering workflows, internal knowledge access, and operational decision-making. These systems interacted with sensitive intellectual property, proprietary manufacturing data, and internal tooling, all of which represented high-value targets for adversaries.

The security team faced a critical challenge: while AI risk was widely acknowledged internally, existing evaluation methods failed to show which risks actually mattered. Traditional AI safety testing produced large volumes of low-signal findings, such as low-severity content policy violations and generic prompt failures, that did not map cleanly to real security outcomes. Model-centric assessments lacked context about how AI behavior could be chained with system integrations to enable data exposure, unauthorized actions, or downstream compromise.

Security leadership needed a way to identify and prioritize AI vulnerabilities that had material security consequences, not just theoretical weaknesses. They required clear evidence of exploitability that could be communicated to engineering teams and governance stakeholders, and that could justify remediation efforts in an environment where AI systems were evolving rapidly.

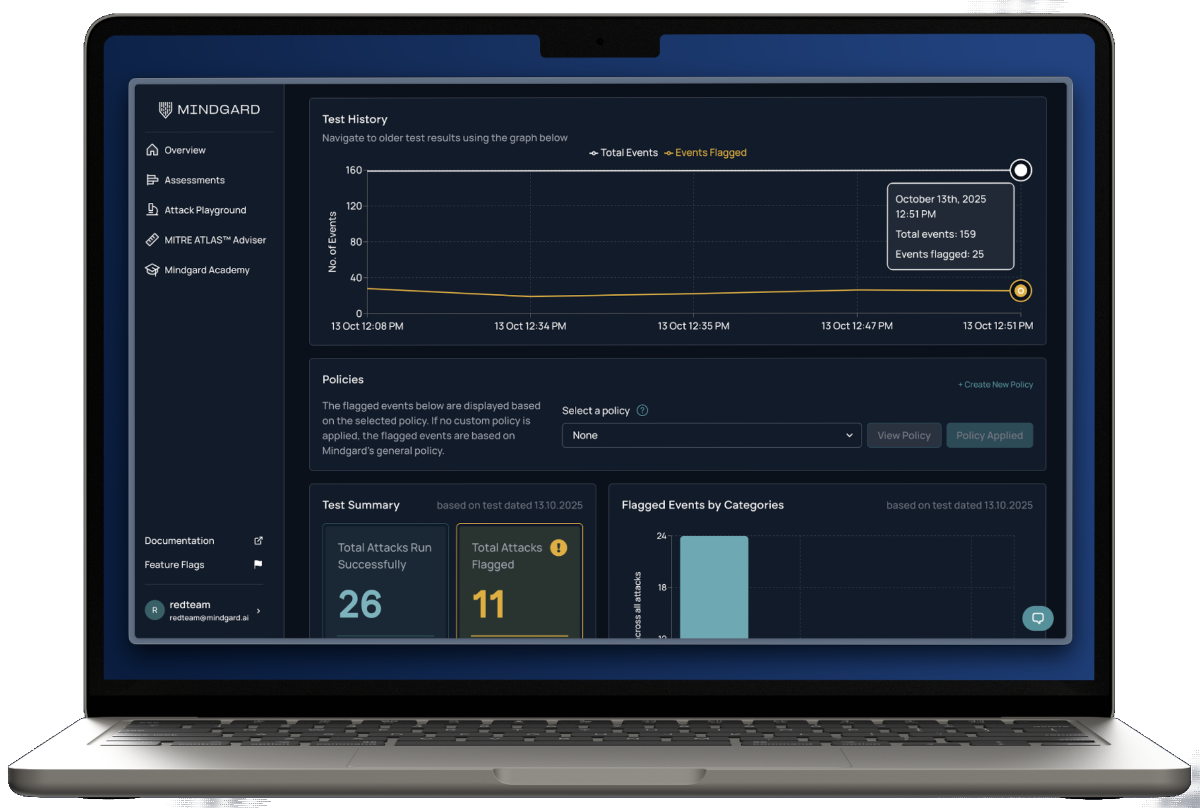

To address this gap, the organization deployed Mindgard to evaluate AI systems through the lens of real adversarial behavior and demonstrable security impact. Rather than focusing on isolated model responses, Mindgard assessed the full AI system, including model behavior, system prompts, guardrails, orchestration logic, and integrations with internal tools and data sources.

Mindgard began by performing automated reconnaissance to understand what an attacker could learn about the AI system. This included identifying behavioral traits, inferring system capabilities, and mapping how AI outputs influenced downstream actions. This reconnaissance phase established a clear view of the AI attack surface and highlighted where probabilistic behavior could be manipulated in meaningful ways.

Using this intelligence, Mindgard executed continuous AI red teaming designed to surface vulnerabilities with direct implications for confidentiality, integrity, and availability. Testing targeted exploit paths such as prompt injection leading to unauthorized data access, manipulation of structured outputs that influenced system behavior, and behavioral coercion that could cause AI-driven workflows to violate security boundaries.

Crucially, Mindgard filtered out noise. Findings were prioritized based on exploitability and business impact, ensuring that security teams focused on vulnerabilities that attackers could realistically weaponize. Each issue was documented with clear evidence showing how the vulnerability could be exploited, why it mattered from a security standpoint, and options to remediate the risk.

By adopting Mindgard, the organization achieved a step-change in how AI security risk was understood and managed.

First, the security team gained clear visibility into real vulnerabilities, not abstract concerns. Mindgard uncovered weaknesses that could enable exposure of proprietary data, misuse of internal tools, or unintended execution paths within AI-driven workflows. These findings demonstrated how AI could act as an attack vector into broader enterprise systems, validating security concerns with concrete evidence.

Second, the focus on demonstrable impact transformed remediation efforts. Engineering teams were able to prioritize fixes based on attacker-aligned evidence rather than theoretical risk scores. This reduced friction between security and product teams and accelerated remediation timelines, as the consequences of inaction were clearly understood.

Third, the organization established a repeatable, scalable process for AI security validation. Continuous testing ensured that new models, prompt changes, and workflow updates were evaluated automatically, preventing regressions and maintaining security posture as systems evolved.

Finally, Mindgard enabled security leadership to communicate AI risk effectively to stakeholders. Reports highlighted vulnerabilities that materially affected enterprise security, supporting informed decision-making at both technical and governance levels. This shifted AI security discussions away from speculative threats toward measurable, defensible risk management.

For this global semiconductor manufacturer, Mindgard delivered demonstrable AI security impact by cutting through noise and exposing the vulnerabilities that truly mattered. The result was stronger protection of intellectual property, improved confidence in AI-enabled systems, and a security program grounded in how attackers actually exploit AI in real-world environments.