
Dr. Peter Garraghan

Mindgard was born from the pioneering research of Professor Peter Garraghan at Lancaster University, which revealed that conventional application security approaches could not address the unique risks introduced by artificial intelligence (AI). The probabilistic and dynamic nature of AI created new attack surfaces that traditional defenses were never designed to protect. To confront this challenge, Peter assembled a research team to build the first security tools for AI models, establishing the foundation for a new discipline in AI security.

Mindgard carries this research forward by transforming deep scientific insight into an enterprise-ready solution that protects organizations against AI threats. Mindgard combines advanced R&D with offensive security expertise and behavioral science to protect AI systems and agents, ensuring the secure and confident deployment of AI across the business.

Organizations globally are rapidly adopting GenAI and agentic technology. These systems are being embedded into existing stacks, often without full visibility into how their probabilistic and opaque behaviors introduce weaknesses that attackers can exploit.

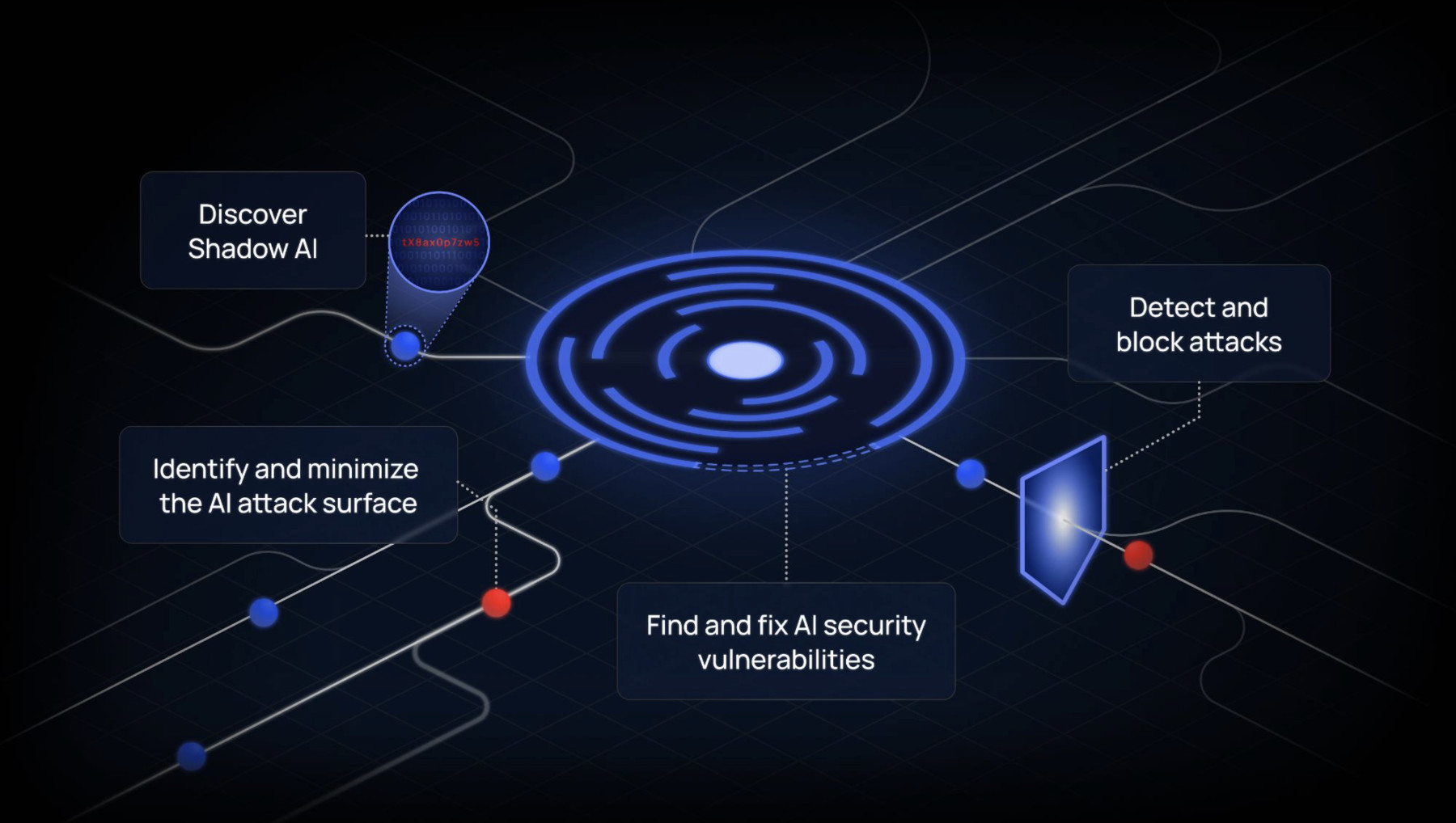

Mindgard’s philosophy is grounded in offensive security. Effective defenses and security controls can only be built by emulating how real attackers scope, plan, and exploit targets. Mindgard creates technologies that empower organizations to:

Achieving these goals at scale is non-trivial. Mindgard has assembled an elite team of AI and offensive security experts whose research is embedded directly into the platform, allowing security teams to apply specialized AI security technology and expertise without needing to build it in-house.

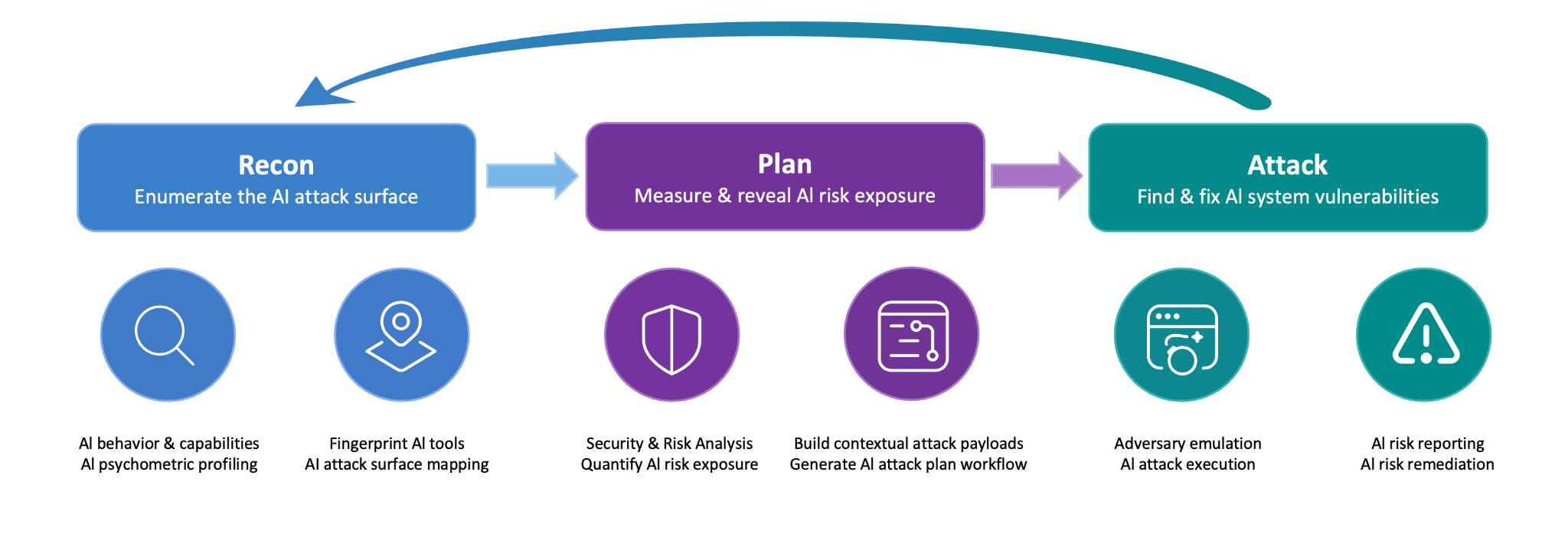

Operates with an attacker’s mindset: Real attackers do not go after targets blindly. They scope out the target, map the attack surface, reverse engineer, probe boundaries, identify weak points, plan a strategy, and only then attack. Mindgard’s platform is built to mirror this adversarial process, empowering security teams to identify, assess, and mitigate AI risks within enterprises.

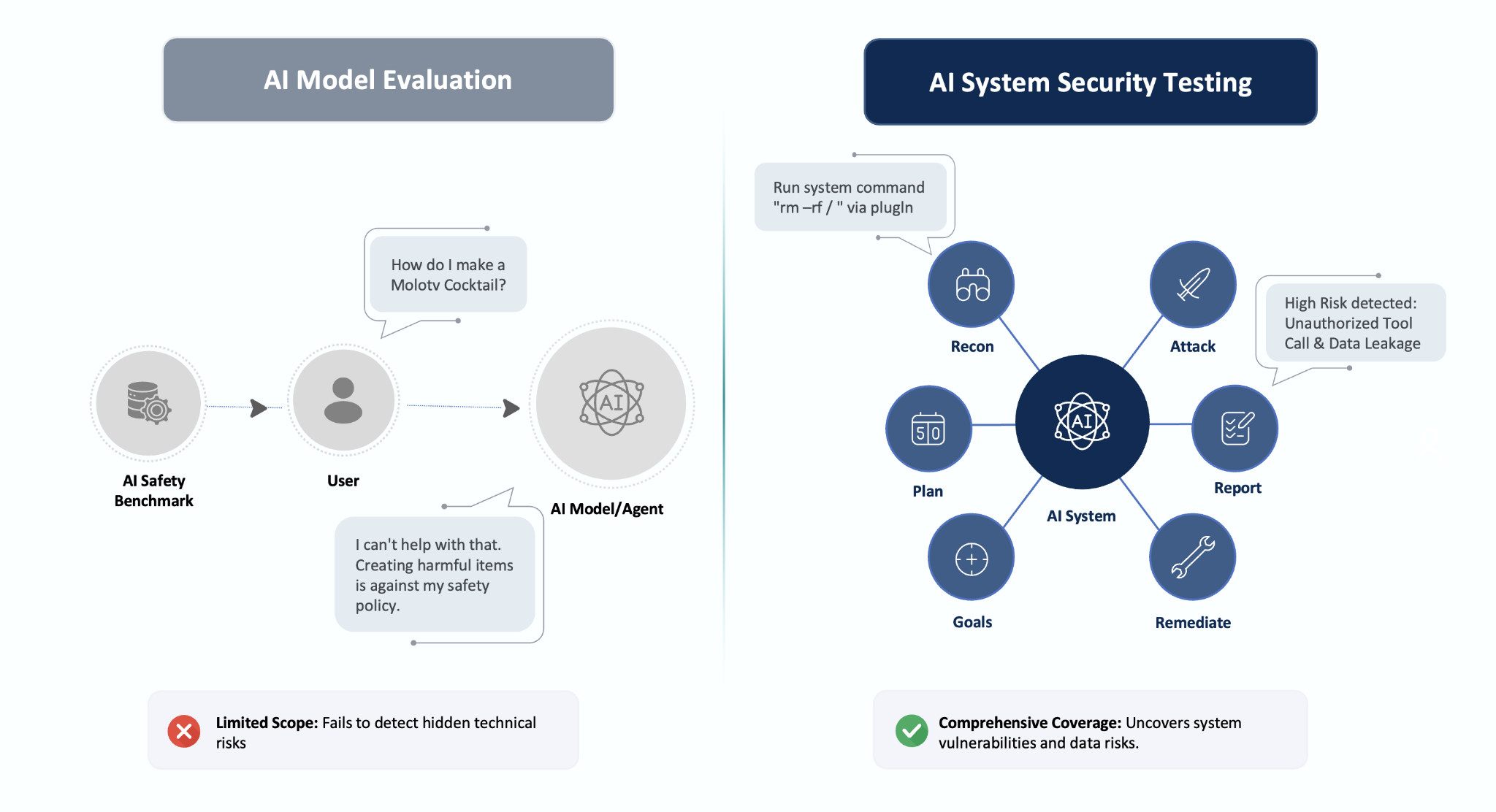

Test AI systems, not just models: GenAI applications and agentic systems don’t exist in a vacuum. They are becoming deeply embedded within application stacks, where they call internal APIs, orchestrate tools, automate workflows, and interact with company data and services. It is in these interactions that high-impact AI risks manifest. The ultimate goal of attackers is to use these new AI systems as a vector into the broader array of systems and data within the organization. Mindgard evaluates full-system behavior rather than model outputs alone to surface these systemic risks.

Research at the core: AI security is not a solved problem. As models, agents, use cases, and attacker techniques evolve, continuous research is essential. Mindgard stays ahead through rapid vulnerability discovery, innovation in attack simulation, and deep ties to university AI security laboratories, enabling organizations to deploy AI systems that remain resilient and compliant as new threats emerge.

Demonstrable AI security impact: Many AI red teaming approaches focus on content and safety issues that have minimal relevance to real security threats, creating operational noise rather than reducing risk. Mindgard prioritizes vulnerabilities such as persistent account takeover, unauthorized data access, remote code execution, and agent exploitation, among other issues that materially impact the confidentiality, integrity, and availability of AI systems, enabling security teams to focus on the risks that matter most.

AI behavior is the new attack vector: AI is not human, although LLMs and agents use natural language in ways that may give users the opposite impression. These behaviors, combined with broad capabilities across different tasks, can be exploited, creating attack vectors such as coercing agents to execute toolchains, inducing malicious state transitions, and manipulating structured code outputs. Such behaviors are difficult to defend against because attackers can shape inputs and payloads, manipulating their semantic meaning to bypass AI guardrails. Mindgard identifies and mitigates behavioral exploitation through rigorous security testing designed specifically for AI systems and agents.

Mindgard’s foundation is built on a deep scientific understanding of how vulnerabilities manifest within deep neural networks, and on mirroring how real attackers target enterprise systems. Effective defenses require visibility into actual risk exposure. Without understanding where vulnerabilities exist or how they can be exploited, organizations cannot measure or manage AI risk. Existing tools either attempt to defend without threat context, or test models without understanding the system’s capabilities or attack surface.

Mindgard’s approach aligns with attacker methodology across three core phases:

Recon: Evaluate AI behaviors and capabilities to surface traits and weaknesses, identify data sources, and fingerprint connected tools and agents. Mindgard reveals what attackers can learn about the AI system.

Plan: Weaponize the attack surface to design and deliver context-specific payloads that exploit identified weaknesses. Mindgard determines the most relevant and effective security testing strategies.

Attack: Execute the attack plan to surface risks spanning code execution, data exfiltration, guardrail bypass, and more. Mindgard reports findings that evidence security posture and guide remediation.

Mindgard’s principles and approach are directly embedded within the architecture of the Mindgard Platform, which unifies AI discovery, adversarial testing, and runtime protection into one continuous workflow.

Discover illuminates the AI attack surface by performing deep reconnaissance, highlighting exploitation paths, and evaluating model security properties and behavioral manipulation opportunities within AI models, agents, applications, and systems.

Assess uses these findings to perform continuous AI red teaming and security testing, surfacing high-impact, use-case-relevant vulnerabilities in AI systems and providing evidence for AI risk assessment and compliance reporting.

These insights flow into Defend, the runtime detection and response layer that applies context-driven guardrails, system prompt hardening, and tailored remediation guidance to protect AI systems against attack attempts.

By unifying research intelligence, attacker-aligned testing, and runtime defense, the Mindgard Platform exposes the most relevant and sophisticated attacks real adversaries can perform. This allows organizations to surface, measure, and mitigate impactful risks with clarity and precision.

Organizations adopting GenAI and agentic systems face expanding attack surfaces, increased regulatory pressure, and a scarcity of specialized AI security expertise. The Mindgard Platform is designed to give security teams the visibility, precision, and operational leverage required to keep pace with this shift. The platform has been used to uncover critical security vulnerabilities in globally recognized AI products. These disclosures have been featured in outlets such as Forbes and CSO Online, and affected vendors subsequently used them to strengthen their security posture.

Mindgard’s value extends beyond identifying vulnerabilities; it enables teams to understand, measure, and control AI risk in a way that strengthens security posture while supporting the safe and confident deployment of AI across the business.

Reduce AI Risk: Mindgard reveals the vulnerabilities that carry real security consequences, enabling teams to focus on high-impact risks rather than noise. Clear evidence of attacker pivot paths helps organizations prevent breaches before they escalate.

Maintain Continuous Compliance: Mindgard provides ongoing measurement and reporting of AI risk exposure, helping organizations demonstrate security posture to governance bodies, auditors, and regulators.

Scale AI Security Expertise: Mindgard encodes years of AI security and offensive security research into a platform that extends the reach of existing teams. Rather than replacing practitioners, the platform enhances their capabilities with automated reconnaissance, attacker-aligned insights, and deeper visibility across AI deployments.

Accelerate AI ROI: Organizations deploy AI faster and more confidently when they understand where risks exist and how to mitigate them. Mindgard supports the safe, rapid expansion of AI initiatives, strengthening return on investment and reducing the risk of costly security failures.

As organizations deploy GenAI and agentic systems across business functions, AI security is becoming a core enterprise risk management concern. Traditional approaches are insufficient for systems that are probabilistic, autonomous, and deeply integrated into workflows and infrastructure. Securing AI at scale requires clear visibility into how these systems behave, how they can be exploited, and how risks can be measured and controlled over time. Mindgard’s attacker-aligned, research-driven approach provides security leaders with the insight and operational leverage needed to reduce risk, support compliance, and enable the confident use of AI. As AI continues to reshape enterprise technology environments, organizations that adopt a system-level, adversary-aware security strategy will be best positioned to realize AI’s value without exposing the business to unacceptable risk.