Mindgard’s free tool lifts the lid on unknown and undetected AI cyber risks

- Mindgard’s AI Security Labs automates AI security testing and the assessment of threats that currently go undetected by organisations due to a lack of skills, time and money

- At zero cost, AI Security Labs enables cyber security assessments of a range of attacks against AI, LLMs, and GenAI

- Demonstrates the potential security risks that AI presents to an organisation

- Helps engineers learn more about AI security

LONDON (7 February, 2024) – Mindgard, the market-leading AI cybersecurity platform, today launched Mindgard's AI Security Labs, a free online tool that lets engineers perform red teaming by evaluating the cyber risk to AI systems, including large language models (LLMs) like ChatGPT. As well as de-risking a wide range of AI deployment scenarios, the tool marks a major advance in the cyber threat educational tools available to engineers.

As enterprises rapidly develop or adopt AI to gain competitive advantage, they are exposed to new attack vectors that conventional security tools cannot address. Even using so-called foundation models, such as ChatGPT, exposes them to risks as there have been no automated processes available until now to test the possible impacts of attacks.

Mindgard's AI Security Labs lifts the lid on the exposure to ML attacks faced by model developers and user organisations alike. These risks currently go largely undetected because they are complex to identify and the specialised skills needed are scarce. Current AI penetration tests, if they happen at all, require months of programming and testing by hard-to-find and highly expensive teams. Even where they do happen, any subsequent change in the AI stack, model or underlying data necessitates a completely new test. As a result, senior management is often completely unaware of the likely impact of any disruption.

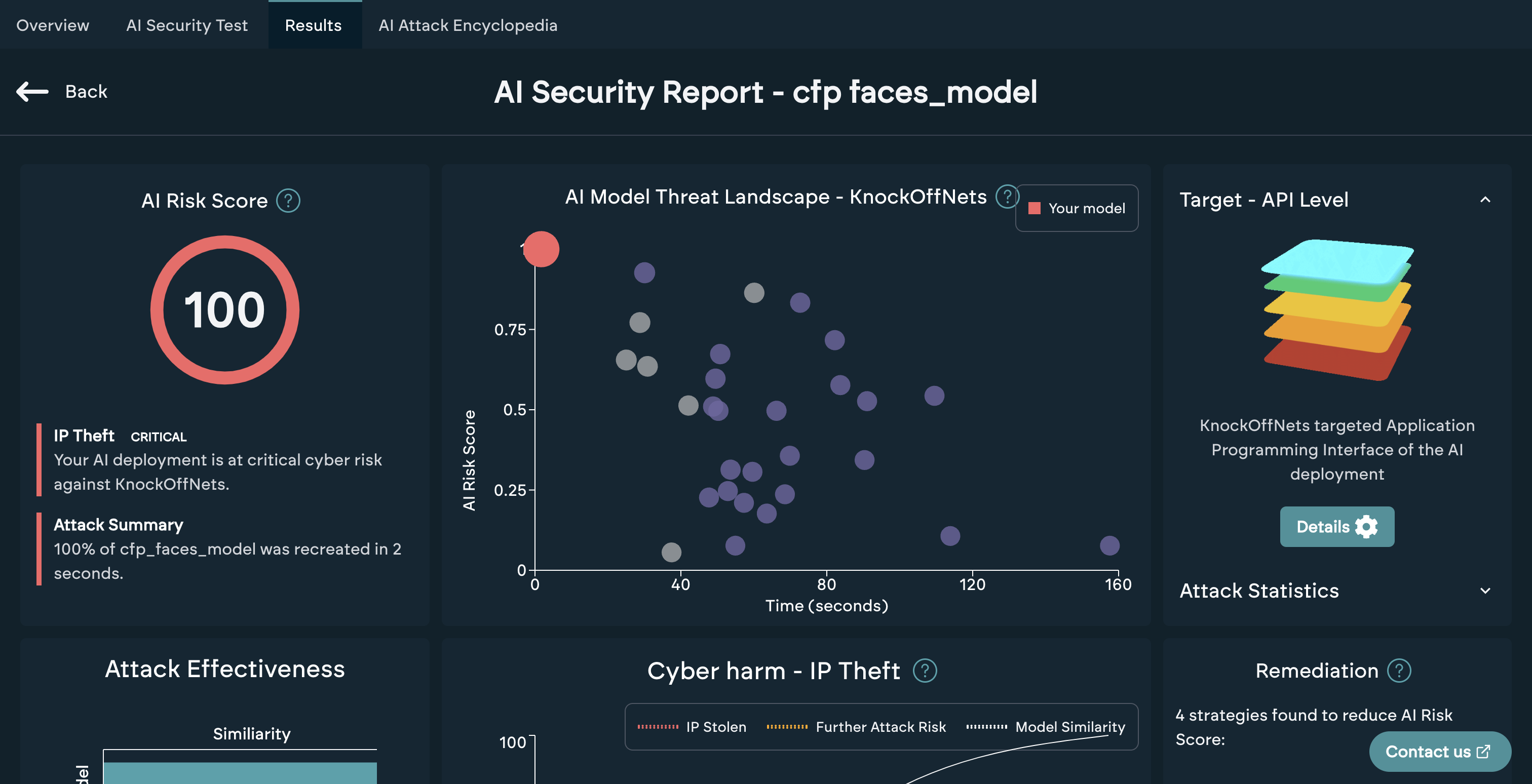

Mindgard's free AI Security Labs automates the threat discovery process, providing repeatable AI security testing and reliable risk assessment in minutes rather than months. It allows engineers to select from a range of attacks against popular AI models, datasets and frameworks to assess potential vulnerabilities. The results provide insight into the current “art of the possible” in AI attacks and the likelihood of evasion, IP theft, data leakage, and model copying threats.

"Most organisations are flying blind when deploying AI, with no way to perform red teaming against emerging cyber risks," said Dr. Peter Garraghan, CEO/CTO of Mindgard and Professor at Lancaster University. "Until now, there has been nowhere for technical teams to learn about the real threats to AI security. We created this free tool to empower engineers on the front lines of AI adoption with the education and capabilities to properly evaluate the attack surface."

After the phenomenally fast adoption of LLMs following the launch of ChatGPT 3.5, a range of possible attacks on AI systems has started to emerge. Data poisoning has been observed, where chatbots have been made to swear or produce anomalous results. Data extraction is another threat, where an LLM reveals, for example, the sensitive data on which it was trained. And copying entire AI/ML models is increasingly common: Mindgard’s AI security researchers demonstrated this by copying ChatGPT 3.5 in two days at a cost of just $50.

"Established cybersecurity tools are ineffective against AI's new threat landscape," said Dr. Peter Garraghan, CTO of Mindgard. "Our free offering bridges that gap by putting test capabilities into engineers' hands so they can properly secure AI before deployment."

Mindgard’s AI Security Labs is available via a simple online sign-up. No payment or credit card information is required. Key benefits of Mindgard's free AI cyber risk tool include:

- AI red teaming across more than 170 unique attack scenarios

- Assessment of cyber risk of leading LLMs such as Mistral

- Demonstration of jailbreaking, data leakage, evasion, and model copying attacks

- Easy selection of AI models, datasets and frameworks to be used in the AI attack scenario

- Detailed reports on AI cyber risk and attack success rates

In addition to immediate sign-up on its website, Mindgard plans to make its solution available on the Azure Marketplace in the coming months, with Google Cloud Platform (GCP) and Amazon Web Services (AWS) to follow.

About Mindgard

Mindgard is a deep-tech company specialising in cybersecurity for AI. Its flagship AI security platform is built upon years of world-class R&D and is used by top global enterprises to secure AI/ML models and data. Founded in 2022 at world-renowned Lancaster University, Mindgard is based in London, UK, and backed by leading investors such as IQ Capital and Lakestar. Learn more at https://mindgard.ai/

Mindgard in the news

- Mindgard’s Dr. Peter Garraghan on TNW.com Podcast / May 2024. Listen to the full episode at TNW.com.

  "We discussed the questions of security of generative AI, potential attacks on it, and what businesses can do today to be safe."

- Mindgard’s Dr. Peter Garraghan in Businessage.com / May 2024. Read the full article at businessage.com.

  "Even the most advanced AI foundation models are not immune to vulnerabilities. In 2023, ChatGPT itself experienced a significant data breach caused by a bug in an open-source library."

- Mindgard’s Dr. Peter Garraghan in Finance.Yahoo.com / April 2024. Read the full article at finance.yahoo.com.

  "AI is not magic. It's still software, data and hardware. Therefore, all the cybersecurity threats that you can envision also apply to AI."

- Mindgard’s Dr. Peter Garraghan in Verdict.co.uk / April 2024. Read the full article at verdict.co.uk.

  "There are cybersecurity attacks with AI whereby it can leak data, the model can actually give it to me if I just ask it very politely to do so."

- Mindgard in Sifted.eu / March 2024. Read the full article at sifted.eu.

  "Mindgard is one of 11 AI startups to watch, according to investors."

- Mindgard’s Dr. Peter Garraghan in Maddyness.com / March 2024. Read the full article at maddyness.com.

  "You don’t need to throw out your existing cyber security processes, playbooks, and tooling, you just need to update it or re-armor it for AI/GenAI/LLMs."

- Mindgard’s Dr. Peter Garraghan in TechTimes.com / October 2023. Read the full article at techtimes.com.

  "While LLM technology is potentially transformative, businesses and scientists alike will have to think very carefully on measuring the cyber risks associated with adopting and deploying LLMs."

- Mindgard in Tech.eu / September 2023. Read the full article at tech.eu.

  "We are defining and driving the security for AI space, and believe that Mindgard will quickly become a must-have for any enterprise with AI assets."

- Mindgard in Fintech.global / September 2023. Read the full article at fintech.global.

  "With Mindgard’s platform, the complexity of model assessment is made easy and actionable through integrations into common MLOps and SecOps tools and an ever-growing attack library."